David Kurtz

Or then again they might not! The non-PeopleSoft Oracle stuff is at blog.go-faster.co.uk.David Kurtzhttp://www.blogger.com/profile/00468908370233805717noreply@blogger.comBlogger174125

Enabling Cursor Sharing in PeopleSoft Processes

One of the challenges that PeopleSoft gives to an Oracle database is that many processes dynamically generate many SQL statements. They usually have different literal values each time, some may also reference different non-shared instances of temporary records. Each statement must be fully parsed by the Oracle statements. That consumes CPU and takes time. Oracle has already recommended using bind variables instead of literal values for that reason.

Reusing AE StatementsIt would generally be better if the SQL used bind variables rather than literal values. In Application Engine, one option is to set the ReUseStatement attribute on the steps in question. Then bind variables in Application Engine remain bind variables in the SQL and are not converted to literals. This can reduce parse time (see Minimising Parse Time in Application Engine with ReUseStatement). However, this attribute is not set by default. This is partly for legacy PeopleTools reasons, and partly due to the pitfalls discussed below. Over the years, Oracle has got much better at setting this attribute where possible in delivered PeopleSoft application code. There are still many places where it could still be added. However, there are some considerations before we add it ourselves.

- When a customer sets the ReUseStatement attribute in the delivered code, it is a customisation that has to be migrated using Application Designer. It has to be maintained to ensure that subsequent releases and patches do not revert it.

- ReUseStatement cannot be introduced across the board, but only on steps that meet certain criteria. It doesn't work when dynamic code is generated with %BIND(…,NOQUOTES), or if a %BIND() is used in a SELECT clause. Worse, setting this attribute when it should not be can cause the application to function incorrectly. So each change has to be tested carefully.

If you can't remove the literal values in the SQL code, then another option is to introduce cursor sharing in Oracle. Essentially, all literals are converted to bind variables before the SQL is parsed, and thus statements that only differ in the literal values can be treated as the same statement. If the statement is still in the shared pool, then it is not fully reparsed and uses the same execution plan.

Oracle cautions against using cursor sharing as a long-term fix: "The best practice is to write sharable SQL and use the default of EXACT for CURSOR_SHARING… FORCE is not meant to be a permanent development solution."

I realise that I am now about to suggest doing exactly that, but only for specific processes, and never for the whole database. I have tested enabling cursor sharing at database level a few times and have never had a good experience.

Session Settings for Processes Executed on the Process SchedulerIt is easy to set a session setting for a specific process run on the PeopleSoft process scheduler. The first thing a process does is to set the status of its own request record to 7, indicating that it is processing.

A trigger can be created on this transition that will then be executed in the session of the process. I initially developed this technique to set other session settings for nVision reports. I introduced a database table to hold a list of the settings, and the trigger matches this metadata to the processes being run by up for 4 attributes: process type, process name, operation and run control.

- see Setting Oracle Session Parameters for Specific Process Scheduler Processes

- The scripts are available on GitHub

- Trigger: set_prcs_sess_parm_trg.sql. The trigger expects that psftapi.sql has also been installed.

- Example metadata set_prcs_sess_parm.sql

CREATE OR REPLACE TRIGGER sysadm.set_prcs_sess_parm

BEFORE UPDATE OF runstatus ON sysadm.psprcsrqst

FOR EACH ROW

FOLLOWS sysadm.psftapi_store_prcsinstance

WHEN (new.runstatus = 7 AND old.runstatus != 7 AND new.prcstype != 'PSJob')

DECLARE

l_cmd VARCHAR2(100 CHAR);

…

BEGIN

FOR i IN (

WITH x as (

SELECT p.*

, row_number() over (partition by param_name

order by NULLIF(prcstype, ' ') nulls last, NULLIF(prcsname, ' ') nulls last,

NULLIF(oprid , ' ') nulls last, NULLIF(runcntlid,' ') nulls last) priority

FROM sysadm.PS_PRCS_SESS_PARM p

WHERE (p.prcstype = :new.prcstype OR p.prcstype = ' ')

AND (p.prcsname = :new.prcsname OR p.prcsname = ' ')

AND (p.oprid = :new.oprid OR p.oprid = ' ')

AND (p.runcntlid = :new.runcntlid OR p.runcntlid = ' '))

SELECT * FROM x WHERE priority = 1

) LOOP

…

IF NULLIF(i.parmvalue,' ') IS NOT NULL THEN

l_cmd := 'ALTER SESSION '||i.keyword||' '||l_delim||i.param_name||l_delim||l_op||i.parmvalue;

EXECUTE IMMEDIATE l_cmd;

END IF;

END LOOP;

EXCEPTION

WHEN OTHERS THEN …

END;

/The first delivered program that was a candidate for cursor sharing was GLPOCONS (GL Consolidations process). All that is necessary is to insert the relevant metadata, and it will apply the next time the process starts. Anything you can set with an ALTER SESSION command can be put in the metadata. At times, other settings have been defined, hence the insert statement is written in this way.

INSERT INTO sysadm.ps_prcs_sess_parm (prcstype, prcsname, oprid, runcntlid, keyword, param_name, parmvalue)

with x as (

select 'inmemory_query' param_name, 'SET' keyword, 'DISABLE' parmvalue from dual --Disable inmemory

union all select 'cursor_sharing' , 'SET' keyword, 'FORCE' from dual --to mitigate excessive parse

), y as (

select prcstype, prcsname, ' ' oprid, ' ' runcntlid

from ps_prcsdefn

where prcsname IN('GLPOCONS')

)

select y.prcstype, y.prcsname, y.oprid, y.runcntlid, x.keyword, x.param_name, x.parmvalue

from x,y

/In PeopleSoft, some Application Engine programs are executed by other programs. For example, the General Ledger Revaluation process (FSPCCURR) and (GLPOCONS), will directly invoke the Journal Edit and Budget Check process (GL_JEDIT2) for each journal that needs to be edited. GL_JEDIT2 inherits the process instance of the FSPCCURR process that invoked it, but there is no process scheduler request record for it to update, so the trigger technique described above does not work.

A different approach, specific to GL_JEDIT2 is required. The first thing GL_JEDIT2 does is write the current process instance number onto the JRNL_LN records it is working on.

UPDATE PS_JRNL_LN SET JRNL_LINE_STATUS='0', PROCESS_INSTANCE=:1

WHERE BUSINESS_UNIT=:2 AND JOURNAL_ID=:3 AND JOURNAL_DATE=TO_DATE(:4,'YYYY-MM-DD') AND UNPOST_SEQ=0The update statement may update many rows, but I only want to enable cursor sharing once. Therefore I have created a compound trigger.

- The trigger only fires when a statement updates PS_JRN_LN.PROCESS_INSTANCE from a zero to a non-zero value.

- The after statement section executes once after the update statement completes. This will contain the logic that checks the setting of module to verify that this is a GL_JEDIT2 process and that the current process instance is a process that is currently executing. It also enhances the value of the MODULE setting with the process name and instance; thus making it possible to determine which GL_JEDIT2 process was invoked by which parent process. Finally, it enables cursor sharing for the current session. However, the after statement section cannot read the data values being updated.

- Therefore an after row section is needed to collect the process instance. It fires for each row being updated. It is as minimal as possible to avoid adding overhead to the update statement. It copies the updated value of PROCESS_INSTANCE to a global PL/SQL variable, and nothing else. The variable value can then be read in the after statement section.

- The dbms_output commands are left over from testing and have been commented out in the final trigger.

CREATE OR REPLACE TRIGGER gfc_jrnl_ln_gl_jedit2

FOR UPDATE OF process_instance ON ps_jrnl_ln

WHEN (new.process_instance != 0 and old.process_instance = 0)

COMPOUND TRIGGER

l_process_instance INTEGER;

l_runcntlid VARCHAR2(30);

l_module VARCHAR2(64);

l_action VARCHAR2(64);

l_prcsname VARCHAR2(12);

l_cursor_sharing CONSTANT VARCHAR2(64) := 'ALTER SESSION SET cursor_sharing=FORCE';

AFTER EACH ROW IS

BEGIN

l_process_instance := :new.process_instance;

--dbms_output.put_line('process_instance='||l_process_instance);

END AFTER EACH ROW;

AFTER STATEMENT IS

BEGIN

IF l_process_instance != 0 THEN

dbms_application_info.read_module(l_module,l_action);

--dbms_output.put_line('module='||l_module||',action='||l_action);

IF l_module like 'PSAE.GL_JEDIT2.%' THEN --check this session is instrumented as being GL_JEDIT

--check process instance being set is a running FSPCCURR process

SELECT prcsname, runcntlid

INTO l_prcsname, l_runcntlid

FROM psprcsrqst

WHERE prcsinstance = l_process_instance AND runstatus = '7';

l_module := regexp_substr(l_module,'PSAE\.GL_JEDIT2\.[0-9]+',1,1)||':'||l_prcsname||':PI='||l_process_instance||':'||l_runcntlid;

dbms_application_info.set_module(l_module,l_action);

--dbms_output.put_line('set module='||l_module||',action='||l_action);

EXECUTE IMMEDIATE l_cursor_sharing;

--dbms_output.put_line('set cursor_sharing');

END IF;

END IF;

EXCEPTION

WHEN NO_DATA_FOUND THEN

--dbms_output.put_line('Cannot find running '||l_prcsname||' process instance '||l_process_instance);

NULL; --cannot find running process instance number

WHEN OTHERS THEN

--dbms_output.put_line('Other Error:'||sqlerrm);

NULL;

END AFTER STATEMENT;

END gfc_jrnl_ln_gl_jedit2;

/Configuring Shared Global Area (SGA) in a Multitenant Database with a PeopleSoft Pluggable Database (PDB)

I have been working on a PeopleSoft Financials application that we have converted from a stand-alone database to be the only pluggable database (PDB) in an Oracle 19c container database (CDB). We have been getting shared pool errors in the PDB that lead to ORA-4031 errors in the PeopleSoft application.

I have written a longer version of this article on my Oracle blog, but here are the main points.

SGA Management with a Parse Intensive System (PeopleSoft).PeopleSoft systems dynamically generate lots of non-shareable SQL code. This leads to lots of parse and consumes more shared pool. ASMM can respond by shrinking the buffer cache and growing the shared pool. However, this can lead to more physical I/O and degrade performance and it is not beneficial for the database to cache dynamic SQL statements that are not going to be executed again. Other parse-intensive systems can also exhibit this behaviour.

In PeopleSoft, I normally set DB_CACHE_SIZE and SHARED_POOL_SIZE to minimum values to stop ASMM shuffling too far in either direction. With a large SGA, moving memory between these pools can become a performance problem in its own right.

We removed SHARED_POOL_SIZE, DB_CACHE_SIZE and SGA_MIN_SIZE settings from the PDB. The only SGA parameters set at PDB level are SGA_TARGET and INMEMORY_SIZE.

SHARED_POOL_SIZE and DB_CACHE_SIZE are set as I usually would for PeopleSoft, but at CDB level to guarantee a minimum buffer cache size.

This is straightforward when there is only one PDB in the CDB. I have yet to see what happens when I have another active PDB with a non-PeopleSoft system and a different kind of workload that puts less stress on the shared pool and more on the buffer cache.

Initialisation Parameters- SGA_TARGET "specifies the total size of all SGA components". Use this parameter to control the memory usage of each PDB. The setting at CDB must be at least the sum of the settings for each PDB.

- Recommendations:

- Use only this parameter at PDB level to manage the memory consumption of the PDB.

- In a CDB with only a single PDB, set SGA_TARGET to the same value at CDB and PDB levels.

- Therefore, where there are multiple PDBs, SGA_TARGET at CDB level should be set to the sum of the setting for each PDB. However, I haven't tested this yet.

- There is no recommendation to reserve SGA for use by the CDB only, nor in my experience is there any need so to do.

- SHARED_POOL_SIZE sets the minimum amount of shared memory reserved to the shared pool. It can optionally be set in a PDB.

- Recommendation: However, do not set SHARED_POOL_SIZE at PDB level. It can be set at CDB level.

- DB_CACHE_SIZE sets the minimum amount of shared memory reserved to the buffer cache. It can optionally be set in a PDB.

- Recommendation: However, do not set DB_CACHE_SIZE at PDB level. It can be set at CDB level.

- SGA_MIN_SIZE has no effect at CDB level. It can be set at PDB level at up to half of the manageable SGA

- Recommendation: However, do not set SGA_MIN_SIZE.

- INMEMORY_SIZE: If you are using in-memory query, this must be set at CDB level in order to reserve memory for the in-memory store. The parameter defaults to 0, in which case in-memory query is not available. The in-memory pool is not managed by Automatic Shared Memory Management (ASMM), but it does count toward the total SGA used in SGA_TARGET.

- Recommendation: Therefore it must also be set in the PDB where in-memory is being used, otherwise we found(contrary to the documetntation) that the parameter defaults to 0, and in-memory query will be disabled in that PDB.

- A-04031 on Multitenant Database with Excessive Amounts of KGLH0 and / or SQLA Memory and Parameter SHARED_POOL_SIZE or SGA_MIN_SIZE Set at the PDB Level (Doc ID 2590172.1) – December 2022, Updated April 2023

- This one says “Remove the PDB-level SHARED_POOL_SIZE and/or SGA_MIN_SIZE initialization parameters. The only SGA memory sizing parameter that Oracle recommends setting at the PDB level is SGA_TARGET.”

- About memory configuration parameter on each PDBs (Doc ID 2655314.1) – Nov 2023

- “As a best practice, please do not to set SHARED_POOL_SIZE and DB_CACHE_SIZE on each PDBs and please manage automatically by setting SGA_TARGET.”

- "This best practice is confirmed by development in Bug 30692720"

- Bug 30692720 discusses how the parameters are validated. Eg. "Sum(PDB sga size) > CDB sga size"

- Bug 34079542: "Unset sga_min_size parameter in PDB."

PSFT_PLAN: A Sample Oracle Database Resource Manager Plan for PeopleSoft

- If you never run out of CPU, then you have probably bought/rented/allocated/licensed too many CPUs.

- If you do run out of CPU, then you should use the database resource manager to prioritise the processes that are most important to the business.

- If you don't enable the resource manager, you will have less visibility of when you do run out of CPU.

- See also Go-Faster Blog: More Bang for your Buck in the Cloud with Resource Manager

_GROUP100% Oracle system processes. Defined automatically. 2PSFT

_GROUP100% Any process that connects to the database as either SYSADM (the default PeopleSoft owner ID) or PS has higher priority than other processes unless other rules apply. The online application (other than ad hoc query) falls into this category so that the online user experience is safeguarded before other PeopleSoft processes.

This includes remote call Cobol processes, but not remote call Application Engine that should be run in the component processor. 4BATCH

_GROUP100% Process scheduler processes, and processes run by the process schedulers 5NVISION

_GROUP100% nVision (NVSRUN) and nVision report book (RPTBOOK) processes 6PSQUERY

_ONLINE

_GROUP90%Ad hoc queries are allocated to one of three consumer groups with the same priority, but different CPU guarantees, comprising:

- on-line PS/Queries,

- nVision reports run through the PIA,

_BATCH

_GROUP9%

- PS/Queries run on the process scheduler using the PSQUERY application engine. A 4-hour maximum runtime limit is defined.

_GROUP1%

- nVision through the 3-tier nVision client

_GROUP1%Other low-priority processes LOW

_LIMITED

_GROUP1%Other low-priority processes, but whose maximum query time is limited. OTHER

_GROUPS1%All other processes. Defined automatically.

- Enable PeopleSoft instrumentation: Set EnableAEMonitoring=1 in ALL PeopleSoft application server and process scheduler domains so that PeopleSoft processes set MODULE and ACTION information in the session attributes (using DBMS_APPLICATION_INFO).

- PeopleTools PeopleBooks: Administration Tools -> Data Management -> Administering PeopleSoft Databases on Oracle -> Monitoring PeopleSoft MODULE and ACTION Information, Press Enter to collapse

- PeopleSoft DBA Blog: Undocumented (until PeopleTools 8.55) Application Engine Parameter: EnableAEMonitoring

- PeopleSoft DBA Blog: PeopleTools 8.50 uses DBMS_APPLICATION_INFO to Identify Database Sessions

- Go-Faster Blog: One of my Favourite Database Things: DBMS_APPLICATION_INFO

- Install instrumentation trigger for PeopleSoft (psftapi.sql). Not all PeopleSoft processes are instrumented. COBOL, SQR, and nVision do not set MODULE or ACTION. When a PeopleSoft process is started by the process scheduler, the first thing it does is set its own status to 7, meaning that it is processing. This script creates a database trigger that fires on that DML and sets the session attributes MODULE to the name of the process and ACTION to the process instance number. Application Engine processes may then subsequently update these values again.

Group

Priority Consumer Group 2MODULE_ACTIONQUERY_MANAGER.QUERY_VIEWER6PSQUERY_ONLINE_GROUP 3MODULERPTBOOK

NVSRUN5NVISION_GROUP PSQRYSRV%6PSQUERY_ONLINE_GROUP PSAE.PSQUERY.%6PSQUERY_BATCH_GROUP 4CLIENT_PROGRAMPSRUNRMT2PSFT_GROUP psae%

PSAESRV%

PSDSTSRV%

PSMSTPRC%

PSRUN@%

PSSQR%

pssqr%

sqr%4BATCH_GROUP PSQRYSRV%6PSQUERY_ONLINE_GROUP PSNVSSRV%6NVSRUN_GROUP SQL Developer

sqlplus%

Toad%8LOW_GROUP / LOW_LIMITED_GROUP 5ORACLE_USERPS

SYSADM2PSFT_GROUP

- psft_resource_plan_simple.sql creates the resource plan. This is intended to be a starting point to which either unwanted parts can be removed, or additional requirements can be added

- resource_plan_report.sql reports on all the resource plan metadata.

There are other resource manager options that are either not illustrated in the sample plan, or that are commented out. They may be worth considering in some situations.

- PeopleSoft does not use parallel query by default, but if you do use it, you may well want to limit which processes use how much parallelism. Consumer groups can specify a limit to the parallel query degree.

- If you use the resource plan to restrict the degree of parallelism, and you also plan to vary the number of CPUs in a cloud environment, then I suggest creating a resource plan for each number of CPUs and switch between the plans by changing the setting of the RESOURCE_MANAGER_PLAN parameter.

DBMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE(

'PSFT_PLAN', 'NVISION_GROUP', 'nVision Reports.'

,mgmt_p5 => 100

,parallel_degree_limit_p1=>2

);

- A parallel query may queue waiting to obtain sufficient parallel query server processes. A timeout can be specified to limit that wait and to determine the behaviour when the timeout is reached. The query can either be cancelled raising error ORA-07454, or run at a reduced parallelism).

DBMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE(

'PSFT_PLAN', 'PSQUERY_ONLINE_GROUP'

,mgmt_p6 => 90

,parallel_queue_timeout=>900

,pq_timeout_action=>'RUN'

);

- A consumer group can restrict queries that run for a long time, or that are expected to run for a long time based on their optimizer cost. They can be switched to the CANCEL_SQL group after a number of seconds and they will terminate with ORA-00040: active time limit exceeded - call aborted:. This has only specified for the LOW_LIMITED_GROUP, and the PSQUERY_BATCH_GROUP for scheduled queries because the message is captured by the process scheduler and logged. It has not been specified for PSQUERY_ONLINE_GROUP because this error is not handled well by the online application. Just the Oracle error message will be displayed to the user without further explanation, which is neither friendly nor helpful. Instead, there are PeopleSoft configuration options to limit query runtime.

DBMS_RESOURCE_MANAGER.CREATE_PLAN_DIRECTIVE(

'PSFT_PLAN', 'PSQUERY_BATCH_GROUP'

,mgmt_p6 => 1

,switch_group => 'CANCEL_SQL'

,switch_time => 14400

,switch_estimate => TRUE

,switch_for_call => TRUE

);

- Sometimes customers may have different priorities and different priorities at different times that cannot be satisfied by a single resource plan. In which case, different resource plans can be activated at different times by different scheduler windows.

- Oracle White Paper: Using Oracle Database ResourceManager

- Documentation: Oracle Resource Manager Enhancements in Oracle Database 10g

- Documentation: Oracle Resource Manager Enhancements in Oracle Database 12c Release 1 (12.1)

What PS/Query is that?

Sometimes, performance analysis will turn up a problem SQL query that is probably a PS/Query. However, I need to know which PS/Query it is should I wish to alter it or talk to the user who wrote it.

Is it a PS/Query?It is quite easy to spot SQL queries that are generated from queries defined in the PS/Query tool. These are typical characteristics:

- Single character row source aliases (eg. A, B, D)

- The same row source with a suffix 1 (eg. D1) for query security records.

- Effective date/sequence subqueries are always correlated back to the same table.

- Order by column position number rather than column names or aliases.

SELECT A.EMPLID, A.ATTENDANCE, A.COURSE, B.DESCR, D.NAME, A.SESSION_NBR,

TO_CHAR(A.STATUS_DT,'YYYY-MM-DD'),B.COURSE

FROM PS_TRAINING A, PS_COURSE_TBL B, PS_PERSONAL_DTA_VW D, PS_PERS_SRCH_QRY D1

WHERE D.EMPLID = D1.EMPLID

AND D1.ROWSECCLASS = 'HCDPALL'

AND ( A.COURSE = :1

AND A.ATTENDANCE IN ('S','W')

AND A.COURSE = B.COURSE

AND A.EMPLID = D.EMPLID )

The text of a PS/Query is not stored in the database. Instead, as with other objects in PeopleSoft, it is held as various rows in PeopleTools tables. The PSQRY% tables are used to generate the SQL on demand. We can query these tables to identify the query.

REM findqry.sql

REM (c)Go-Faster Consultancy 2012

SELECT a.oprid, a.qryname

FROM psqryrecord a

, psqryrecord b

, psqryrecord d

WHERE a.oprid = b.oprid

AND a.qryname = b.qryname

AND a.oprid = d.oprid

AND a.qryname = d.qryname

AND a.corrname = 'A'

AND a.recname = 'TRAINING'

AND b.corrname = 'B'

AND b.recname = 'COURSE_TBL'

AND d.corrname = 'D'

AND d.recname = 'PERSONAL_DTA_VW'

/

OPRID QRYNAME

------------------------------ ------------------------------

TRN002__SESSION_ROSTER

TRN003__COURSE_WAITING_LIST

Writing the query on PSQRYRECORD to find queries, which always is slightly different each time, is quite boring. So I have written a script that will dynamically generate the SQL to identify a PS/Query.

Start with a SQL_IDSQL_ID c3h6vf2w5fxgp

--------------------

SELECT …

FROM PSTREELEAF B, PSTREENODE C, PS_OPER_UNIT_TBL A, PS_PRODUCT_TBL G

…

UNION SELECT …

FROM PSTREENODE D,PS_TREE_NODE_TBL E, PSTREELEAF F

…

--------------------------------------------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes |TempSpc| Cost (%CPU)| Time |

--------------------------------------------------------------------------------------------------------------------------

| * 7 | INDEX STORAGE FAST FULL SCAN | PSBPSTREELEAF | 426K| 19M| | 1178 (1)| 00:00:01 |

| 10 | TABLE ACCESS BY INDEX ROWID BATCHED| PS_PRODUCT_TBL | 1 | 41 | | 3 (0)| 00:00:01 |

| * 11 | INDEX RANGE SCAN | PS_PRODUCT_TBL | 1 | | | 2 (0)| 00:00:01 |

| * 14 | INDEX RANGE SCAN (MIN/MAX) | PS_PRODUCT_TBL | 1 | 21 | | 2 (0)| 00:00:01 |

| * 15 | TABLE ACCESS STORAGE FULL | PSTREENODE | 135K| 5709K| | 663 (1)| 00:00:01 |

| * 17 | INDEX STORAGE FAST FULL SCAN | PS_OPER_UNIT_TBL | 1791 | 35820 | | 4 (0)| 00:00:01 |

| * 20 | INDEX RANGE SCAN (MIN/MAX) | PS_PSTREENODE | 1 | 33 | | 3 (0)| 00:00:01 |

| * 23 | INDEX RANGE SCAN (MIN/MAX) | PSAPSTREELEAF | 1 | 32 | | 3 (0)| 00:00:01 |

| * 26 | INDEX RANGE SCAN (MIN/MAX) | PS_OPER_UNIT_TBL | 1 | 20 | | 2 (0)| 00:00:01 |

| 33 | TABLE ACCESS INMEMORY FULL | PS_TREE_NODE_TBL | 35897 | 1647K| | 6 (0)| 00:00:01 |

| * 35 | TABLE ACCESS STORAGE FULL | PSTREENODE | 167K| 9670K| | 663 (1)| 00:00:01 |

|- * 36 | INDEX RANGE SCAN | PS_PSTREELEAF | 1 | 39 | | 1267 (1)| 00:00:01 |

| 37 | INDEX STORAGE FAST FULL SCAN | PS_PSTREELEAF | 480K| 17M| | 1267 (1)| 00:00:01 |

| * 40 | INDEX RANGE SCAN (MIN/MAX) | PS_PSTREENODE | 1 | 33 | | 3 (0)| 00:00:01 |

| * 43 | INDEX RANGE SCAN (MIN/MAX) | PS_TREE_NODE_TBL | 1 | 28 | | 2 (0)| 00:00:01 |

--------------------------------------------------------------------------------------------------------------------------

Query Block Name / Object Alias (identified by operation id):

-------------------------------------------------------------

…

7 - SEL$1 / B@SEL$1

10 - SEL$1 / G@SEL$1

11 - SEL$1 / G@SEL$1

…

15 - SEL$1 / C@SEL$1

17 - SEL$1 / A@SEL$1

…

33 - SEL$6 / E@SEL$6

35 - SEL$6 / D@SEL$6

36 - SEL$6 / F@SEL$6

37 - SEL$6 / F@SEL$6

…

I use this query on DBA_HIST_SQL_PLAN to extract the tables that have single-character row source aliases that correspond to PeopleSoft records, and put them into PLAN_TABLE. I use this table because it is delivered by Oracle as a global temporary table, so it is always there and I can make use of it even if I only have read-only access.

INSERT INTO plan_table (object_name, object_alias)

with p as ( --plan lines with single letter aliases

SELECT DISTINCT object_owner, object_type, object_name, regexp_substr(object_alias,'[[:alpha:]]',2,1) object_alias

from dba_hist_sql_plan p

, ps.psdbowner d

where p.sql_id = '&&sql_id' --put SQL ID here--

and p.object_name IS NOT NULL

and p.object_owner = d.ownerid

and regexp_like(object_alias,'"[[:alpha:]]"') --single character aliases

), r as ( --PeopleSoft table records and the table name

select r.recname, DECODE(r.sqltablename,' ','PS_'||r.recname,r.sqltablename) sqltablename

from psrecdefn r

where r.rectype = 0 --PeopleSoft table records

)

select r.recname, object_alias --referenced table

from p, r

where p.object_type like 'TABLE%'

and p.object_name = r.sqltablename

union --a query plan may reference an index and not the table

select r.recname, object_alias --table for referenced index

from p, r

, all_indexes i

where p.object_type like 'INDEX%'

and i.index_name = p.object_name

and i.owner = p.object_owner

and i.table_name = r.sqltablename

order by 2,1

/

RECNAME O

--------------- -

OPER_UNIT_TBL A

PSTREELEAF B

PSTREENODE C

PSTREENODE D

TREE_NODE_TBL E

PSTREELEAF F

PRODUCT_TBL G

Next, I can run this anonymous PL/SQL block to dynamically build the SQL query on PSQRYRECORD (one reference for every table) and execute it to find the matching PS/Queries

DECLARE

l_sep1 VARCHAR2(20);

l_sep2 VARCHAR2(20);

l_counter INTEGER := 0;

l_sql CLOB := 'SELECT r1.oprid, r1.qryname';

l_where CLOB;

TYPE t_query IS RECORD (oprid VARCHAR2(30), qryname VARCHAR2(30));

TYPE a_query IS TABLE OF t_query INDEX BY PLS_INTEGER;

l_query a_query;

BEGIN

FOR i IN(

SELECT *

FROM plan_table

ORDER BY object_alias

) LOOP

l_counter := l_counter + 1;

dbms_output.put_line(i.object_alias||':'||i.object_name);

IF l_counter = 1 THEN

l_sep1 := ' FROM ';

l_sep2 := ' WHERE ';

ELSE

l_sep1 := ' ,';

l_sep2 := ' AND ';

l_where := l_where||' AND r1.oprid = r'||l_counter||'.oprid AND r1.qryname = r'||l_counter||'.qryname';

END IF;

l_sql := l_sql||l_sep1||'psqryrecord r'||l_counter;

l_where := l_where||l_sep2||'r'||l_counter||'.corrname = '''||i.object_alias||''' AND r'||l_counter||'.recname = '''||i.object_name||'''';

END LOOP;

l_sql := l_sql||l_where||' ORDER BY 1,2';

dbms_output.put_line(l_sql);

EXECUTE IMMEDIATE l_sql BULK COLLECT INTO l_query;

FOR indx IN 1 .. l_query.COUNT

LOOP

DBMS_OUTPUT.put_line (indx||':'||l_query(indx).oprid||'.'||l_query(indx).qryname);

END LOOP;

END;

/

The seven records found in my execution plan become a query of PSQRYRECORD 7 times, one for each record, joined on operator ID and query name.

SELECT r1.oprid, r1.qryname

FROM psqryrecord r1 ,psqryrecord r2 ,psqryrecord r3 ,psqryrecord r4 ,psqryrecord r5 ,psqryrecord r6 ,psqryrecord r7

WHERE r1.corrname = 'A' AND r1.recname = 'OPER_UNIT_TBL'

AND r1.oprid = r2.oprid AND r1.qryname = r2.qryname AND r2.corrname = 'B' AND r2.recname = 'PSTREELEAF'

AND r1.oprid = r3.oprid AND r1.qryname = r3.qryname AND r3.corrname = 'C' AND r3.recname = 'PSTREENODE'

AND r1.oprid = r4.oprid AND r1.qryname = r4.qryname AND r4.corrname = 'D' AND r4.recname = 'PSTREENODE'

AND r1.oprid = r5.oprid AND r1.qryname = r5.qryname AND r5.corrname = 'E' AND r5.recname = 'TREE_NODE_TBL'

AND r1.oprid = r6.oprid AND r1.qryname = r6.qryname AND r6.corrname = 'F' AND r6.recname = 'PSTREELEAF'

AND r1.oprid = r7.oprid AND r1.qryname = r7.qryname AND r7.corrname = 'G' AND r7.recname = 'PRODUCT_TBL'

ORDER BY 1,2

NB. You can only open public queries (where OPRID is a single space) or your own private queries. In the Query Manager, you cannot see a private query owned by another user.

…

3: .PS_TREE_PRODUCT

4: .QUERY_PRODUCT_TREE

5: .RM_TREE_PRODUCT

6:XXXXXX.PS_TREE_PRODUCT_XX

…

Reducing the Operating System Priority of PeopleSoft Processes

I wrote about controlling the operating system priority of processes in PeopleSoft Tuxedo domains in Chapters 13 of 14 of PeopleSoft for the Oracle DBA, but I think it is worth a note here.

On Linux and Unix systems, the nice command can be used to lower the operating system scheduling priority of a process (or a privileged can increase the priority). When a server has no free CPU, processes with a lower priority get less time on the CPU. However, when there is free CPU available, the scheduling priority does not affect the amount of CPU that the process can utilise.

On Unix, the priority of a Tuxedo server process can be adjusted using the -n server command line option in the configuration. The parameters to this option are simply passed through to the nice(2) function. Hence, this option does not work on Windows.

PSPRCSRV SRVGRP=BASE

SRVID=101

MIN=1

MAX=1

RQADDR="SCHEDQ"

REPLYQ=Y

CLOPT="-n 4 -sInitiateRequest -- -C psprcs.cfg -CD HR88 -PS PSUNX -A start -S PSPRCSRV"- Therefore Stand-alone Application Engine processes (psae) and Cobol processes inherit the priority of the process scheduler server process (PSPRCSRV).

- However, if the Application Engine server process (PSAESRV) is used, its priority can be set directly.

- If the process scheduler is co-resident with the application server, then it could be run at a lower priority to ensure the online users get preferential allocation of CPU, and that online performance does not suffer excessively at the hands of the batch.

- A system might have two websites: one for self-service and the other for the 'back-office' users. You could configure separate application servers for each site, and run the self-service application server is run at a lower priority.

In PeopleSoft, I prefer to create additional variables in the configuration file (psprcs.cfg).

[Process Scheduler]

;=========================================================================

; General settings for the Process Scheduler

;=========================================================================

PrcsServerName=PSUNX

;-------------------------------------------------------------------------

;Reduce priority of Process Scheduler server process, set to 0 if not needed

Niceness=4

...[PSAESRV]

;=========================================================================

; Settings for Application Engine Tuxedo Server

;=========================================================================

;-------------------------------------------------------------------------

;Reduce priority of application engine server process, set to 0 if not needed

Niceness=5

...{APPENG}

#

# PeopleSoft Application Engine Server

#

PSAESRV SRVGRP=AESRV

SRVID=1

MIN={$PSAESRV\Max Instances}

MAX={$PSAESRV\Max Instances}

REPLYQ=Y

CLOPT="-n {$PSAESRV\Niceness} -- -C {CFGFILE} -CD {$Startup\DBName} -S PSAESRV"

{APPENG}

...

PSPRCSRV SRVGRP=BASE

SRVID=101

MIN=1

MAX=1

RQADDR="SCHEDQ"

REPLYQ=Y

CLOPT="-n {$Process Scheduler\Niceness} -sInitiateRequest -- -C {CFGFILE} -CD {$Startup\DBName} -PS {$Process Scheduler\PrcsServerName} -A start -S PSPRCSRV"

#

# PeopleSoft Application Engine Server

#

PSAESRV SRVGRP=AESRV

SRVID=1

MIN=1

MAX=1

REPLYQ=Y

CLOPT="-n 5 -- -C psprcs.cfg -CD HR88 -S PSAESRV"

...

PSPRCSRV SRVGRP=BASE

SRVID=101

MIN=1

MAX=1

RQADDR="SCHEDQ"

REPLYQ=Y

CLOPT="-n 4 -sInitiateRequest -- -C psprcs.cfg -CD HR88 -PS PSUNX -A start -S PSPRCSRV"

Prioritising Scheduled Processes by Operator ID/Run Control

Batch processing is like opera (and baseball) - "It ain't over till the fat lady sings". Users care about when it starts and when it finishes. If the last process finishes earlier, then that is an improvement in performance.

This note describes a method of additionally prioritising processes queued to run on the process scheduler in PeopleSoft by their requesting operator ID and run control. Where processing consists of more instances of the same process than can run concurrently, it can be used to make the process scheduler run longer-running processes before shorter-running processes that were scheduled earlier, thus completing batch processing earlier.

In PeopleSoft, without customisation, it is only possible to prioritise processes queued to run on the process scheduler by assigning a priority to the process definition or their process category. Higher priority processes are selected to be run in preference to lower priorities. Otherwise, processes are run in the order of the time at which they are requested to run.

Problem Statement

- process_prioritisation_by_cumulative_runtime.sql - master script that creates metadata table and trigger and then:

- nvision_prioritisation_by_cumulative_runtime.sql - example script to create a procedure to populate metadata for nVision batch.

- gppdprun_prioritisation_by_cumulative_runtime.sql - example script to create a procedure to populate metadata for Payroll/Absence calculation batch.

- process_prioritisation_by_cumulative_runtime_test.sql - test trigger by inserting dummy data into process queue table.

- process_prioritisation_by_cumulative_runtime_report.sql - example of a report to compare median execution time with last execution time.

We need a table that will hold the priority for each combination of process type, process name, operation ID, and run control ID. A corresponding record should be created using the Application Designer project in the GitHub repository.

create table sysadm.ps_xx_gfcprcsprty

(prcstype VARCHAR2(30 CHAR) NOT NULL

,prcsname VARCHAR2(12 CHAR) NOT NULL

,oprid VARCHAR2(30 CHAR) NOT NULL

,runcntlid VARCHAR2(30 CHAR) NOT NULL

,prcsprty NUMBER NOT NULL

--------------------optional columns

,avg_duration NUMBER NOT NULL

,med_duration NUMBER NOT NULL

,max_duration NUMBER NOT NULL

,cum_duration NUMBER NOT NULL

,tot_duration NUMBER NOT NULL

,num_samples NUMBER NOT NULL

) tablespace ptapp;

create unique index sysadm.ps_xx_gfcprcsprty

on sysadm.ps_xx_gfcprcsprty(prcstype, prcsname, oprid, runcntlid)

tablespace psindex compress 3;As processes are scheduled in PeopleSoft, a row is inserted into the process scheduler queue table PSPRCSQUE. A trigger will be created on this table that fires after the insert. It will look for a matching row on the metadata table, PS_XX_GFCPRCSPRTY for the combination of process type, process name, operator ID, and run control ID. If found, the trigger will assign the specified priority to the process request. Otherwise, it will take no action.

CREATE OR REPLACE TRIGGER sysadm.psprcsque_set_prcsprty

BEFORE INSERT ON sysadm.psprcsque

FOR EACH ROW

WHEN (new.prcsname = 'RPTBOOK')

DECLARE

l_prcsprty NUMBER;

BEGIN

SELECT prcsprty

INTO l_prcsprty

FROM ps_xx_gfcprcsprty

WHERE prcstype = :new.prcstype

AND prcsname = :new.prcsname

AND oprid = :new.oprid

AND runcntlid = :new.runcntlid;

:new.prcsprty := l_prcsprty;

EXCEPTION

WHEN no_data_found THEN NULL;

WHEN others THEN NULL;

END;

/

show errorsIn this case, I am only assigning priorities to RPTBOOK processes, so I have added a when clause to the trigger so that it only fires for RPTBOOK process requests. This can either be changed for other processes or removed entirely.

Priority MetadataHow the priorities should be defined will depend on the specific use case. In some cases, you may choose to create a set of metadata that remains unchanged.

In this case, the objective is that the processes to take the longest to run should be executed first. Therefore, I decided that the priority of each nVision report book process (by operator ID and run control ID) will be determined by the median elapsed execution time in the last two months. The priorities are allocated such that the sum of the median execution times for each priority will be as even as possible.

I have created a PL/SQL procedure GFCPRCSPRIORITY to truncate the metadata table and then repopulate it using a query on the process scheduler table (although, an Application Engine program could have been written to do this instead). The procedure is executed daily, thus providing a feedback loop so if the run time varies over time, or new processes are added to the batch, it will be reflected in the priorities.

REM nvision_prioritisation_by_cumulative_runtime.sql

set serveroutput on

create or replace procedure sysadm.gfcprcspriority as

PRAGMA AUTONOMOUS_TRANSACTION; --to prevent truncate in this procedure affecting calling session

l_hist INTEGER := 61 ; --consider nVision processes going back this many days

begin

EXECUTE IMMEDIATE 'truncate table ps_xx_gfcprcsprty';

--populate priorty table with known nVision processes

insert into ps_xx_gfcprcsprty

with r as (

select r.prcstype, r.prcsname, r.prcsinstance, r.oprid, r.runcntlid, r.runstatus, r.servernamerun

, CAST(r.rqstdttm AS DATE) rqstdttm

, CAST(r.begindttm AS DATE) begindttm

, CAST(r.enddttm AS DATE) enddttm

from t, psprcsrqst r

inner join ps.psdbowner p on r.dbname = p.dbname -- in test exclude any history copied from another database

where r.prcstype like 'nVision%' --limit to nVision processes

and r.prcsname like 'RPTBOOK' -- limit to report books

and r.enddttm>r.begindttm --it must have run to completion

and r.oprid IN('NVISION','NVISION2','NVISION3','NVISION4') --limit to overnight batch operator IDs

and r.begindttm >= TRUNC(SYSDATE)+.5-l_hist --consider process going back l_hist days from midday today

and r.runstatus = '9' --limit to successful processes

and r.begindttm BETWEEN ROUND(r.begindttm)-5/24 AND ROUND(r.begindttm)+5/24 --started between 7pm and 5am

), x as (

select r.*, CEIL((enddttm-begindttm)*1440) duration -–rounded up to the next minute

from r

), y as (

select prcstype, prcsname, oprid, runcntlid

, AVG(duration) avg_duration

, MEDIAN(CEIL(duration)) med_duration

, MAX(duration) max_duration

, SUM(CEIL(duration)) sum_duration

, COUNT(*) num_samples

from x

group by prcstype, prcsname, oprid, runcntlid

), z as (

select y.*

, sum(med_duration) over (order by med_duration rows between unbounded preceding and current row) cum_duration

, sum(med_duration) over () tot_duration

from y

)

select prcstype, prcsname, oprid, runcntlid

, avg_duration, med_duration, max_duration, cum_duration, tot_duration, num_samples

--, CEIL(LEAST(tot_duration,cum_duration)/tot_duration*3)*4-3 prcsprty --3 priorities

, CEIL(LEAST(tot_duration,cum_duration)/tot_duration*9) prcsprty --9 priorities

--, DENSE_RANK() OVER (order by med_duration) prcsprty --unlimited priorities

from z

order by prcsprty, cum_duration;

dbms_output.put_line(sql%rowcount||' rows inserted');

commit;

end gfcprcspriority;

/

show errorsThis is the metadata produced on a test system by the above query. It will vary depending on what has been run recently, and how it performed. There are more, shorter processes in the lower priority groups, and fewer, longer processes in the higher priority groups.

PRCSTYPE PRCSNAME OPRID RUNCNTLID PRCSPRTY

------------------------------ ------------ ------------ ------------------------------ ----------

…

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_XXX1 1

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_XXX3 1

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_LLLL8 1

…

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_LLLL9 2

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_STAT8 2

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_STAT1 2

…

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_TEMPXX 6

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_3 6

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_17 6

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_STAT4 7

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_28 7

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_INCXXX 8

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_MORYYY1 8

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_24 9

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_16 9

This test script inserts some dummy rows into PSPRCSQUE to check whether a priority is assigned by the trigger. The insert is then rolled back.

INSERT INTO psprcsque (prcsinstance, prcstype, prcsname, oprid, runcntlid)

VALUES (-42, 'nVision-ReportBook', 'RPTBOOK', 'NVISION','NVS_RPTBOOK_17');

INSERT INTO psprcsque (prcsinstance, prcstype, prcsname, oprid, runcntlid)

VALUES (-43, 'nVision-ReportBook', 'RPTBOOK', 'NVISION','NVS_RPTBOOK_STAT1');

select prcsinstance, prcstype, prcsname, oprid, runcntlid, prcsprty from psprcsque where prcsinstance IN(-42,-43);

rollback;You can see that it was successful because priorities 2 and 7 were assigned.

PRCSINSTANCE PRCSTYPE PRCSNAME OPRID RUNCNTLID PRCSPRTY

------------ ------------------------------ ------------ ------------ ------------------------------ ----------

-43 nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_STAT1 2

-42 nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_17 7This query in script process_prioritisation_by_cumulative_runtime_report.sql reports on the average, median, and cumulative median execution time for each nVision process that ran to success during the overnight processing window as calculated by the package GFCPRCSPRIORITY and stored in PS_XX_GFCPRCSPRTY. It also compares that to the priority and last actual run time for that process.

Example Output Cum.

Average Median Median Total Last Run Actual

Prcs Duration Duration Duration Duration Duration Num Process Duration Duration Duration Priorty

PRCSTYPE PRCSNAME OPRID RUNCNTLID Prty (mins) (mins) (mins) (mins) (mins) Samples Priority (mins) Diff % Diff Diff

-------------------- ---------- ------------ -------------------- ---- -------- -------- -------- -------- -------- ------- -------- -------- -------- -------- -------

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_4 9 90.65 131 209 1834 1997 23 6 189 58 44 3

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_16 9 159.17 163 209 1997 1997 23 9 177 14 9 0

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_14 8 89.26 127 215 1703 1997 23 6 167 40 31 2

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_24 8 115.87 117 165 1576 1997 23 9 144 27 23 -1

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_MORYYY1 7 93.13 85 165 1459 1997 23 8 158 73 86 -1

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_28 7 88.30 80 172 1374 1997 23 8 108 28 35 -1

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_INCXXX 6 83.61 79 149 1294 1997 18 7 118 39 49 -1

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_17 6 70.96 69 105 1143 1997 23 7 81 12 17 -1

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_STAT4 6 68.00 72 81 1215 1997 8 7 81 9 13 -1

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_MMMMMM 5 52.45 46 119 914 1997 22 5 91 46 100 0

nVision-ReportBook RPTBOOK NVISION2 NVS_RPTBK_TEMPXX 5 50.48 49 104 963 1997 23 5 94 45 92 0

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_3 5 55.52 55 99 1018 1997 23 6 79 24 44 -1

nVision-ReportBook RPTBOOK NVISION NVS_RPTBOOK_1 5 47.70 56 137 1074 1997 23 4 56 0 0 1

…

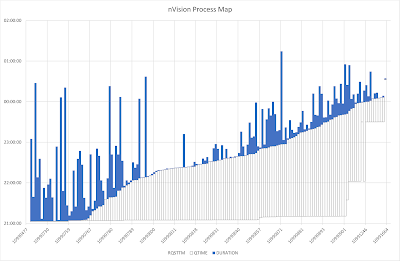

The query in prcsmap.sql is used to produce the data for a map of the processes, showing request time, time spent queuing, and time spent executing. It is the basis of the second chart above. I normally run this in SQL Developer and export the data as an Excel workbook. There is an example spreadsheet in the Github repository.

9 levels of PrioritisationWith prioritisation, we can see that the long-running jobs with higher priority ran earlier.Querying the PeopleSoft Message Log with SQL

It is easy to access the PeopleSoft message log in Process Monitor component, but it can be a little difficult to work with in SQL because it is stored in multiple tables.

This started when I wanted to generate a PeopleSoft log message as a single string of text, so I could investigate shared pool memory errors by searching for ORA-04031 errors. Ultimately, the string 'ORA-04031' is stored in PS_MESSAGE_LOGPARM, but I wanted to see the whole error message.

- Each process, has a request record on PSPRCSRQST, it can have many messages.

- Each message is stored in the message log table PS_MESSAGE_LOG

- The text of each message is stored in the message catalogue table PSMSGCATDEFN. It can have up to 9 substitution strings (%1, %2, etc).

- A message can have up to 9 parameters stored on PS_MESSAGE_LOGPARM that are substituted into the message string.

I assemble the message text in a PL/SQL function exactly as PeopleTools programs do, substituting the variables in the message string from the message catalogue with the parameter values. The PL/SQL function is put into the SQL query as a common table expression so that I don't have to create a function or package in the database. The function returns the full message text in a CLOB, thus I can then easily manipulate the message string in SQL.

In this case, I wrote a SQL query to search for ORA-04031 (see psmsglogora4031.sql on Github), but the same PL/SQL function can be used in various queries.

It can be slow to search the generated message string. It can be faster to search PS_MESSAGE_LOGPARM directly.

WITH FUNCTION psmsgtext(p_process_instance INTEGER, p_message_seq INTEGER) RETURN CLOB IS

l_message_log ps_message_log%ROWTYPE;

l_message_text CLOB;

BEGIN

SELECT *

INTO l_message_log

FROM ps_message_log

WHERE process_instance = p_process_instance

AND message_seq = p_message_seq;

SELECT message_text

INTO l_message_text

FROM psmsgcatdefn

WHERE message_set_nbr = l_message_log.message_set_nbr

AND message_nbr = l_message_log.message_nbr;

--dbms_output.put_line(l_message_text);

FOR i IN (

SELECT *

FROM ps_message_logparm

WHERE process_instance = p_process_instance

AND message_seq = p_message_seq

ORDER BY parm_seq

) LOOP

--dbms_output.put_line(i.message_parm);

l_message_text := REPLACE(l_message_text,'%'||i.parm_seq,i.message_parm);

END LOOP;

--and tidy up the unused replacements at the end

RETURN REGEXP_REPLACE(l_message_text,'%[1-9]','');

END;

x as (

select r.prcstype, r.prcsname, r.oprid, r.runcntlid

, l.*, psmsgtext(l.process_instance, l.message_seq) message_text

from ps_message_log l

LEFT OUTER JOIN psprcsrqst r ON r.prcsinstance = l.process_instance

WHERE …

)

select *

from x

ORDER BY dttm_stamp_sec

/

Process Process Operator Process Msg Msg Msg

Type Name ID Run Control Instance Seq JOBID PROGRAM_NAME Set# Msg# Sev DTTM_STAMP_SEC

-------------------- --------------- ---------- ---------------------- ---------- ---- --------------- --------------- ----- ----- ----- ----------------------------

MESSAGE_TEXT

------------------------------------------------------------------------------------------------------------------------------------------------------

nVision-ReportBook RPTBOOK VP1 NVS_XXXXXXX_99 1234567 1 PRCS SCHDL psprschd 65 70 0 01/04/2023 20.37.21

Process Request shows status of 'INITIATED' or 'PROCESSING' but no longer running

nVision-ReportBook RPTBOOK VP1 NVS_XXXXXXX_99 1234567 2 PRCS SCHDL psprschd 65 73 0 01/04/2023 20.37.23

PSNT1 failed to post files to the report repository. Server scheduled to try again on 2023-04-01-20.37.41.239539. See log

...Clearing PeopleTools Physical Cache Files after Database Restore or Database Flashback

I've written previously about how to clear the physical cache files on a PeopleTools process, but I have found myself explaining it a few times recently, so I am going to post about it again.

When you refresh the database of a PeopleSoft system, you need to clear the physical cache files of the PeopleTools processes. The files are outside the database and they no longer reflect what is inside the database. This includes all application servers and process schedulers and anywhere where Application Designer or a client process is used.

It is common to refresh a database when testing a PeopleSoft system. For example, to copy production to a performance test environment. It is also increasingly common to use Oracle database flashback during testing. A guaranteed restore point is taken, a test is performed, and then the database is flashed back to that restore point. Flashback returns the whole database not just to the same logical state, but also the same physical state. Block for block, the entire database is physically the same as when the restore point was taken. Thus a test is completely repeatable with the same initial conditions. Although the database instance will have been restarted during the flashback so the content of the database memory will have been cleared.

It is also common, after the flashback to then make small changes or corrections, take a new restore point and repeat the test. Some of those changes might include Application Designer projects that will then be loaded into the physical cache. Flashing the database back won't change the physical cache files stored outside the database, so they need to be cleared too. Otherwise, they may have higher version numbers than the objects in the database, and caching won't work correctly. When you retest, your changes may not be loaded and executed by PeopleTools processes.

The officially approved method is to go around each server and use either the purge option in the psadmin utility or manually delete the files. See:

- Oracle Support Note: E-WS: How to Delete / Purge Cache for the PeopleSoft Application (Doc ID 753215.1)

- PeopleBooks: System and Server Admin ► Using the Application Server Administration Menu

However, since at least PeopleTools 5, it has been possible to invalidate all physical cache files on all servers by updating the LASTREFRESHDTTM on the single row in table PSSTATUS. Any cached object older than the value of LASTREFRESHDTTM will be purged from the cache when the process that reference that cache is started. Therefore, if immediately after a restore or flashback that value is updated to the current system time, all caches will be purged as the processes are restarted.

UPDATE PSSTATUS

SET LASTREFRESHDTTM = SYSDATE

/

COMMIT

/Programmatically Suspending and Restarting the Process Scheduler

0=Error

1=Down

2=Suspended

3=Running

4=Purging

5=Running With No Report Node

6=Suspended - Disk Low

7=Suspended - Offline

8=Running - Report Rep. Full

9=Overloaded SERVERACTIONProcess Server Action

0=None

1=Stop

2=Suspended

3=Restart

4=Purge See PSSERVERSTAT.

- Stop

update psserverstat

set serveraction = 1 /*Stop*/

where serverstatus = 3 /*Running*/

and servername = ...

/

commit

/

- Suspend

update psserverstat

set serveraction = 2 /*Suspend*/

where serverstatus = 3 /*Running*/

and servername = ...

/

commit

/

- Restart (after suspension)

update psserverstat

set serveraction = 3 /*Restart*/

where serverstatus = 2 /*Suspended*/

and servername = ...

/

commit

/

- Startup (if the Tuxedo domain is running)

update psserverstat

set serveraction = 3

where servername = ...

/

commit

/

Oracle SQL Tracing Processes from Startup

Sometimes, ASH and AWR are not enough. SQL may not be sampled by ASH if it is short-lived, and even if it is sampled, the SQL may not be captured by AWR. Sometimes, in order to investigate a problem effectively, it is necessary to use database session SQL trace.

It is easy to trace a process initiated by the process scheduler with a trigger (see Enabling Oracle Database Trace on PeopleSoft processes with a Trigger).

Another tactic is to use an AFTER LOGON trigger with logic to look at the program name. The program name can be read using SYS_CONTEXT(). If it matches what I am looking for, I can enable session trace.

Here is an example I used for the OpenXML nVision server PSNVSSRV

- I want to trace SQL and not any wait events or bind variables. Therefore, I will set event 10046 at level 1.

- I also set a tracefile_identifier that will be included in the trace file name, so I can more easily identify the trace file.

REM additional SQL trace triggers

CREATE OR REPLACE TRIGGER sysadm.gfc_nvision_trace_on_logon

AFTER LOGON

ON sysadm.schema

DECLARE

l_process_instance INTEGER;

l_program VARCHAR2(64 CHAR);

l_sql VARCHAR2(100);

BEGIN

SELECT sys_context('USERENV', 'CLIENT_PROGRAM_NAME')

INTO l_program

FROM dual;

IF l_program like 'PSNVSSRV%' THEN --then this is a NVISION session

EXECUTE IMMEDIATE 'ALTER SESSION SET tracefile_identifier = ''PSNVSSRV''';

EXECUTE IMMEDIATE 'ALTER SESSION SET events ''10046 TRACE NAME CONTEXT FOREVER, LEVEL 1''';

END IF;

EXCEPTION

WHEN OTHERS THEN NULL;

END;

/

show errors

ALTER TRIGGER sysadm.gfc_nvision_trace_on_logon ENABLE;Adding Flags to Trace Level Overrides in Process Definitions

A trace level is set in a process definition in PS_PRCSDEFN precedence over a trace level set in the process scheduler configuration file (psprcs.cfg).

I often set the process scheduler trace level for Application Engine to 1152 to enable batch timings to both the database batch timings tables and the AE trace file, but then I often find that a trace is left enabled on a few processes to aid performance analysis of a troublesome process.

This script updates the trace level set in the parameter list in the process definition to include the bit flags set by 1152 (to enable batch timings).

- The current trace level is extracted with regular expression substring functions.

- A bitwise OR is performed between the current trace level and the desired settings. There is no single function to do this in Oracle SQL, but it can be calculated simply (see Oracle blog: There is no BITOR() in Oracle SQL).

- The old trace value is replaced with the new one in the parameter list with a regular expression replace function.

- The version number on the process definition is also updated as it would be if updated by the process definition component in the PIA. Thus it is correctly re-cached by the process scheduler, the scheduler does not need to be recycled, nor does the cache need to be cleared

The script is available on Github.

REM fixprcstracelevel.sql

set pages 99 lines 200 serveroutput on

spool fixprcstracelevel append

ROLLBACK;

DECLARE

l_counter INTEGER := 0;

l_trace_expr VARCHAR2(20); /*expression containing TRACE keyword and value*/

l_req_trace_level INTEGER := 1152; /*trace value set in the scheduler config*/

l_cur_trace_level INTEGER; /*current trace level*/

l_new_trace_level INTEGER; /*new calculated trace level*/

l_parmlist ps_prcsdefn.parmlist%TYPE;

BEGIN

for i in (

SELECT t.*

FROM ps_prcsdefn t

WHERE UPPER(t.parmlist) LIKE '%-%TRACE%'

AND prcstype LIKE 'Application Engine'

-- AND parmlisttype IN('1','2','3')

) LOOP

l_trace_expr := REGEXP_SUBSTR(i.parmlist,'\-trace[ ]*[0-9]+',1,1,'i');

l_cur_trace_level := TO_NUMBER(REGEXP_SUBSTR(l_trace_expr,'[0-9]+',1,1,'i'));

l_new_trace_level := l_req_trace_level+l_cur_trace_level-bitand(l_cur_trace_level,l_req_trace_level);

l_parmlist := REGEXP_REPLACE(i.parmlist,l_trace_expr,'-TRACE '||l_new_trace_level,1,1,'i');

IF l_new_trace_level = l_cur_trace_level THEN

dbms_output.put_line(i.prcstype||':'||i.prcsname||':'||i.parmlist||'=>No Change');

ELSE

l_counter := l_counter + 1;

IF l_counter = 1 THEN

UPDATE psversion

SET version = version+1

WHERE objecttypename IN('SYS','PPC');

UPDATE pslock

SET version = version+1

WHERE objecttypename IN('SYS','PPC');

END IF;

dbms_output.put_line(l_counter||':'||i.prcstype||' '||i.prcsname||':'||i.parmlist||'=>'||l_parmlist);

UPDATE ps_prcsdefn

SET version = (SELECT version FROM psversion WHERE objecttypename = 'PPC')

, parmlist = l_parmlist

WHERE prcstype = i.prcstype

AND prcsname = i.prcsname;

END IF;

END LOOP;

COMMIT;

END;

/

spool offApplication Engine:GL_JEDIT:-TRACE 1159=>No Change

1:Application Engine PTDEFSECINRL:-toolstacepc 2048 -toolstracesql 15 -TRACE 15=>-toolstacepc 2048 -toolstracesql 15 -TRACE 1167- TRACE for GL_JEDIT is already 1159, so no change is required.

- TRACE for PTDEFSECINRL was changed from 15 to 1167.

Reporting View Hierarchies

However, the Oracle database describes "dependencies between procedures, packages, functions, package bodies, and triggers accessible to the current user, including dependencies on views created without any database links" in the view ALL_DEPENDENCIES.

This hierarchical query (depend_heir.sql) on this view will report the structure of views within views.

REM depend_hier.sql

undefine view_name

set pages 999 lines 176 long 50000

break on name skip 1 on owner

ttitle 'Dependency Hierarchy'

column my_level format a5 heading 'Level'

column owner format a12

column name format a18

column type format a7

column referenced_type format a7 heading 'Refd|Type'

column referenced_owner format a6 heading 'Refd|Owner'

column referenced_name format a18 heading 'Refd|Name'

column referenced_link_name format a10 heading 'Refd|Link'

column dependency_type heading 'Dep|Type'

column text heading 'View Text' format a80 wrap on

spool depend_hier.&&view_name..lst

with d as (

select * from all_dependencies

union all

select null, null, null, owner, view_name, 'VIEW', null, null

from all_views

where owner = 'SYSADM' and view_name = UPPER('&&view_name')

)

select LPAD(TO_CHAR(level),level,'.') my_level

, d.type, d.owner, d.name

, d.referenced_type, d.referenced_owner, d.referenced_name, d.referenced_link_name

, d.dependency_type

, v.text

from d

left outer join all_views v

on v.owner = d.referenced_owner

and v.view_name = d.referenced_name

connect by nocycle

d.name = prior d.referenced_name

and d.owner = prior d.referenced_owner

start with d.owner IS NULL and d.name IS NULL

/

spool off

ttitle offWed Aug 11 page 1

Dependency Hierarchy

Refd Refd Refd Refd Dep

Level TYPE OWNER NAME Type Owner Name Link Type View Text

----- ------- ------------ ------------------ ------- ------ ------------------ ---------- ---- --------------------------------------------------------------------------------

1 VIEW SYSADM PS_POSN_HISTORY3 SELECT A.Position_Nbr ,A.Position_Entry_Dt ,A.Emplid ,A.EMPL_RCD ,B.EFFDT ,B.EFF

SEQ ,B.Sal_Admin_Plan ,B.Grade ,B.Step ,B.Comprate ,B.Comp_Frequency ,B.Currency

_Cd ,' ' ,' ' ,' ' ,' ' FROM PS_POSN_HISTORY2 A ,PS_JOB B WHERE B.EmplID = A.Emp

lID AND B.EMPL_RCD = A.EMPL_RCD AND B.Position_Nbr = A.Position_Nbr AND B.EffDt

= ( SELECT MAX(C.EffDt) FROM PS_JOB C WHERE C.EmplID = B.EmplID AND C.EMPL_RCD =

B.EMPL_RCD AND (C.EffDt < A.Position_End_Dt OR (C.EffDt = A.Position_End_Dt AND

C.EffSeq = A.EffSeq - 1))) AND B.Effseq = ( SELECT MAX(C.Effseq) FROM PS_JOB C

WHERE C.EmplID = B.EmplID AND C.EMPL_RCD = B.EMPL_RCD AND (C.EffDt < A.Position_

End_Dt OR (C.EffDt = A.Position_End_Dt AND C.EffSeq = A.EffSeq - 1)))

.2 VIEW SYSADM PS_POSN_HISTORY3 TABLE SYSADM PS_JOB HARD

.2 VIEW VIEW SYSADM PS_POSN_HISTORY2 HARD SELECT A.Position_Nbr , A.Position_Entry_Dt , A.Emplid , A.EMPL_RCD , B.EffDt ,

B.EffSeq , B.Action FROM PS_POSN_HISTORY A , PS_JOB B WHERE A.EmplID = B.EmplID

AND A.Empl_Rcd = B.Empl_Rcd AND B.EffDt = ( SELECT MIN(C.EffDt) FROM PS_JOB C WH

ERE C.EmplID = B.EmplID AND C.Empl_Rcd = B.Empl_Rcd AND (C.EffDt > A.Position_En

try_Dt OR (C.EffDt = A.Position_Entry_Dt AND C.EffSeq > A.EffSeq)) AND ((C.Posit

ion_Nbr <> A.Position_Nbr) OR (C.HR_STATUS <> 'A'))) AND B.EffDt<=TO_DATE(TO_CHA

R(SYSDATE,'YYYY-MM-DD'),'YYYY-MM-DD') AND B.EffSeq = ( SELECT MIN(D.EffSeq) FROM

PS_JOB D WHERE D.EmplID = B.EmplID AND D.Empl_Rcd = B.Empl_Rcd AND D.EffDt = B.

EffDt AND ((D.Position_Nbr <> A.Position_Nbr) OR (D.HR_STATUS <> 'A')))

..3 VIEW SYSADM PS_POSN_HISTORY2 TABLE SYSADM PS_JOB HARD

..3 VIEW VIEW SYSADM PS_POSN_HISTORY HARD SELECT A.Position_Nbr ,A.Position_Entry_Dt ,A.Emplid ,A.EMPL_RCD ,A.EFFSEQ ,A.EF

FDT ,A.Sal_Admin_Plan ,A.Grade ,A.Step ,A.CompRate ,A.Comp_Frequency ,A.Currency

_Cd ,' ' ,' ' ,' ' ,' ' FROM PS_Job A WHERE A.Position_Entry_Dt = A.Effdt AND A.

Effseq = ( SELECT MIN(B.Effseq) FROM PS_Job B WHERE B.Emplid = A.Emplid AND B.EM

PL_RCD = A.EMPL_RCD AND B.Effdt = A.Effdt AND B.Position_Nbr = A.Position_Nbr)

...4 VIEW SYSADM PS_POSN_HISTORY TABLE SYSADM PS_JOB HARDOracle Active Data Guard in PeopleSoft with Oracle 19c DML Redirection

Active Data Guard is a configuration option that became available in Oracle 11g where a standby database is maintained as a synchronised physical replica of the primary database and is also open for read-only SQL queries.

PeopleSoft added configuration to direct certain read-only components and processes to an ADG standby using secondary connections in the application servers and process schedulers. However, in PeopleSoft, all scheduled processes update at least the process scheduler request tables, even if they make no updates to application tables. This cannot be done on a read-only standby database and must be directed back to the primary database.

PeopleBooks sets out a method for Implementing Active Data Guard (this link is to the PeopleTools 8.58 documentation). It uses a second schema to which PeopleSoft application servers and process schedulers connect using a second access profile. The second schema contains synonyms for each table and view that either point to the corresponding object in the original schema on the ADG standby, or if the object is going to be updated by the application then via database links to the corresponding object in the primary database. This approach requires knowledge of which tables are updated during otherwise read-only processing, a lot of scripting to generate all the synonyms and grants, and ongoing maintenances as new objects are added to the database.

However, that approach is rendered obsolete by Active Data Guard DML redirection, a new feature in 19c. This post explains how to configure PeopleSoft to make use of Active Data Guard on Oracle 19c with DML redirect.

With DML redirection enabled, updates on the secondary database are automatically redirected back to the primary database via a SQL*Net connection between the databases (not unlike a database link), and then they will be replicated back to the standby database like any other change. PeopleSoft no longer needs to be configured specially to handle updated tables differently. Consequently, the PeopleSoft ADG configuration is massively simplified.

Processes no longer have to be strictly read-only to run on the ADG database. If there are only a small quantity of updates the redirect can handle it.

Database Initialisation ParametersSQL>select * from ps.psdbowner;

DBNAME OWNERID

-------- --------

FINPRD SYSADM

FINADG SYSADM[Startup]

;=========================================================================

; Database Signon settings

;=========================================================================

DBName=FINPRD

DBType=ORACLE

UserId=PSAPPS

UserPswd={V2}FEhk7rIFt2f0GRYaH6B9la8DXXMNtsz1kPZ+

ConnectId=PEOPLE

ConnectPswd={V2}Mw3RFr0MHFBpJHbqXh7Dx9qCsO7TFT4G

StandbyDBName=FINADG

StandbyDBType=ORACLE

StandbyUserId=PSAPPS

StandbyUserPswd={V2}Ski/r2xYCvbTbBhXOGfH8HO7zCRxoDFK5rmb- Oracle Support Note: E-AE: Application Engine Process Might Stay in Initiated Status if PSAESRV Disabled on PeopleSoft ADG Enabled Environment (Doc ID 1641764.1)

- This was raised as Bug 18482301: PSAE may stay in initiated status on ADG configured environment. It was closed as 'not a bug'.

- Using Two Temporary Tablespace in PeopleSoft.

Error in sign on

Database Type: 7 (ORACLE)

Database Name: HCM91

Server Name:

OperID:

ConnectID: people

Process Instance: 0

Reason: Invalid user ID or password for database signon. (id=)

Note: Attempt to authenticate using GUID 6a1ced41-2fe0-11e2-9183-be3e31d6e740

Invalid command line argument list.

process command line: -CT ORACLE -CD HCM91 -GUID 6a1ced41-2fe0-11e2-9183-be3e31d6e740 -SS NO -SN NO

GUID command line : -CT ORACLE -CD HCM91 -CO "PS" -CP Unavailable -R 1 -I 852 -AI AEMINITEST -OT 6 -FP

"C:\app\pt\appserv\prcs\HCM91PSNT\log_output\AE_AEMINITEST_852\" -OF 1[Startup]

;=========================================================================

; Database Signon settings

;=========================================================================

DBName=FINADG

DBType=ORACLE

UserId=PSAPPS

UserPswd={V2}YoAQq7Ut4WBHJL89N9gv9E0AWwLaecGZ4qep

ConnectId=PEOPLE

ConnectPswd={V2}OlSYHuFMZa2c8uonfYkKk+3+APYvTU9N

StandbyDBName=

StandbyDBType=

StandbyUserId=

StandbyUserPswd=- Processes in the new ADGOnly process category.

- A single LOADCACHE category process will be permitted.

- The max concurrence of all other categories will be 0.

- Other process types such as SQR might be needed if they are to be run on the ADG standby

[Startup]

;=========================================================================

; Database Signon settings

;=========================================================================

DBName=FINPRD

DBType=ORACLE

UserId=PSAPPS

UserPswd={V2}EcxeV3mit3GMT5kDfz/z+s0L9B1aUb6ix04f

ConnectId=PEOPLE

ConnectPswd={V2}NtXafW7hlcGY016bhazl2kqqvlSNYMK1

StandbyDBName=

StandbyDBType=

StandbyUserId=

StandbyUserPswd=- ADGOnly concurrency will be 0, or it will be the maximum API aware concurrency on ADG process schedulers.

- PSQUERY, any read-only processes, and any processes in ADGOnly category, will all be marked as being both read-only and in the ADGOnly category.

update pslock

set version = version + 1

where objecttypename IN('SYS','PPC')

/

update psversion

set version = version + 1

where objecttypename IN('SYS','PPC')

/

update ps_servercategory c

set maxconcurrent = CASE WHEN servername like 'PSUNX_A%'

THEN (SELECT s.maxapiaware FROM ps_serverdefn s

WHERE s.servername = c.servername)

ELSE 0 END

where prcscategory = 'ADGOnly'

/

update ps_serverdefn

set version = (SELECT version from psversion where objecttypename = 'PPC')

, lastupddttm = systimestamp

/

update ps_prcsdefn

set version = (SELECT version from psversion where objecttypename = 'PPC')

, prcsreadonly = 1

, prcscategory = 'ADGOnly'

, lastupddttm = systimestamp

where prcsreadonly = 1

or prcscategory = 'ADGOnly'

or prcsname = 'PSQUERY'

/

select prcstype, prcsname, prcscategory, prcsreadonly

from ps_prcsdefn

where prcsreadonly = 1

or prcscategory = 'ADGOnly'

or prcsname = 'PSQUERY'

/

select * from ps_servercategory

where prcscategory IN('ADGOnly')

order by 2,1

/

commit

/PSOFT-FINADG>Select prcstype, prcsname, prcscategory, prcsreadonly

2 From ps_prcsdefn

3 where prcsreadonly = 1

4 or prcscategory = 'ADGOnly'

5 or prcsname = 'PSQUERY'

6 /

PRCSTYPE PRCSNAME PRCSCATEGORY P

------------------------------ ------------ ------------------------------ -

Application Engine AEMINITEST ADGOnly 1

COBOL SQL PTPDBTST ADGOnly 1

SQR Report PTSQRTST ADGOnly 1

Application Engine FB_GEN_EXTR ADGOnly 1

SQR Report XRFWIN ADGOnly 1

SQR Report SWPAUDIT ADGOnly 1

SQR Report SYSAUDIT ADGOnly 1

SQR Report XRFAPFL ADGOnly 1

SQR Report XRFAEPL ADGOnly 1

SQR Report XRFPGDZ ADGOnly 1

SQR Report DDDAUDIT ADGOnly 1

SQR Report XRFEVPC ADGOnly 1

SQR Report XRFFLPC ADGOnly 1

SQR Report XRFFLPN ADGOnly 1

SQR Report XRFFLRC ADGOnly 1

SQR Report XRFIELDS ADGOnly 1

SQR Report XRFMENU ADGOnly 1

SQR Report XRFPANEL ADGOnly 1

SQR Report XRFPCFL ADGOnly 1

SQR Report XRFPNPC ADGOnly 1

SQR Report XRFRCFL ADGOnly 1

SQR Report XRFRCPN ADGOnly 1

Application Engine PSQUERY ADGOnly 1

Application Engine PSCONQRS ADGOnly 1

SYSADM-FINADG>select * from ps_servercategory

2 where prcscategory IN('ADGOnly')

3 order by 2,1

4 /

SERVERNA PRCSCATEGORY P MAXCONCURRENT

-------- ------------------------------ - -------------

…

PSNT ADGOnly 5 0

PSUNX ADGOnly 5 0

PSUNX_A1 ADGOnly 5 5

…PeopleTools 8.58.07 - Application Engine

Copyright (c) 1988-2021 Oracle and/or its affiliates.

All Rights Reserved

PeopleTools SQL Trace value: 159 (0x9f): /cs/psoft/cfg/FINPRD/appserv/prcs/FINPRDA1/log_output/AE_PSQUERY_xxxxxxxx/AE_PSQUERY_xxxxxxxx.trc

PeopleTools PeopleCode Trace value: 64 (0x40): /cs/psoft/cfg/FINPRD/appserv/prcs/FINPRDA1/log_output/AE_PSQUERY_xxxxxxxx/AE_PSQUERY_xxxxxxxx.trc

File: /vob/peopletools/src/pssys/qpm.cppSQL error. Stmt #: 8495 Error Position: 3055 Return: 16000 - ORA-16000: database or pluggable database open for read-only access

Failed SQL stmt: SELECT …

<a query that references a remote database via a database link>

…

Error in running query because of SQL Error, Code=16000, Message=ORA-16000: database or pluggable database open for read-only access (50,380)

PeopleCode Exit(1) Abort invoked by Application at PSQUERY.MAIN.ExecQry. (108,543)

Process xxxxxxxx ABENDED at Step PSQUERY.MAIN.ExecQry (PeopleCode) -- RC = 16 (108,524)

Process %s ABENDED at Step %s.%s.%s (Action %s) -- RC = %sPeopleTools 8.58.07 - Application Engine

Copyright (c) 1988-2021 Oracle and/or its affiliates.

All Rights Reserved

PeopleTools SQL Trace value: 159 (0x9f): /cs/psoft/cfg/FINPRD/appserv/prcs/FINPRDA2/log_output/AE_PSQUERY_19356674/AE_PSQUERY_19356674.trc

File: /vob/peopletools/src/pssys/qdmutil.cppSQL error. Stmt #: 4608 Error Position: 91 Return: 16397 - ORA-16397: statement redirection from Oracle Active Data Guard standby database to primary database failed

Failed SQL stmt: SELECT EXECCOUNT, AVGEXECTIME, AVGFETCHTIME, LASTEXECDTTM, AVGNUMROWS, OPRID, QRYNAME FROM PSQRYSTATS WHERE OPRID = :1 AND QRYNAME = :2 FOR UPDATE OF EXECCOUNT

Application Engine program PSQUERY ended normally- Navigate to: PeopleTools, Utilities, Administration, Query Administration,

- Go to the last tab (Settings),

- Uncheck Run Query Statistics.

update pslock

set version = version + 1

where objecttypename IN('SYS','QDM')

/

update psversion

set version = version + 1

where objecttypename IN('SYS','QDM')

/

update psqrydefn

set version = (SELECT version from psversion where objecttypename = 'QDM')

, execlogging = 'Y'

, lastupddttm = systimestamp

where execlogging != 'Y'

/Running nVision in a Mixed OpenXML/Excel mode

- Support Note: E-NV: nVision Performance using Microsoft Office 2010 and above (Doc ID 1537491.1)

- Oracle's PeopleSoft Technology Blog: OpenXML Mode in Peoplesoft nVision Looks Hard, but it Isn't!

- PeopleSoft DBA Blog: nVisionPerformance Tuning: 11. Excel -v- OpenXML

- 0 = Open XML Mode (see Oracle Support Note 1317246.1)

- 1 = Excel Mode

- 2 = Cross-Platform OpenXML (see Oracle Support Note 1304907.1

…

[nVision]

;=========================================================================

; General settings for nVision

;=========================================================================

…

UseExcelAutomation=2

…- Maximum concurrency for the Excel server has been reduced to 1.

- The maximum number of API aware processes for PSNT_E1 has also be reduced to 1.

- The 'Default' category is disabled on both PSNT_E1 and PSNT_X1.

- These servers only run nVision and PSJob process types,

- They load balance within the same operating system (although this is not appropriate for PSNT_X1 if you also run OpenXML on other non-Windows process schedulers).

- nvisionprcsdefnclone.sql creates the cloned process and server definitions, it can also be configured to create multiple pairs of server definitions etc.

- nvision_prcscategory.sql updates the concurrency on existing process definitions.

- PSPRCSQUE is polled by the process schedulers when looking for work.

- PSPRCSRQST reports the status of processes and is exposed in the Process Monitor component in PeopleSoft.

- PRCSNAME determines the name of the process to be run.

- PRCSCATEGORY specifies the category of the process in the request.

- Change the process name from RPTBOOK to RPTBOOKE, or from NVSRUN to NVSRUNE.

- Change the process category from nVisionOpenXML to nVisionExcel. It is changing the process category that makes the difference to which process scheduler picks up the request, but the name is also changed to maintain consistency.

- If the process request specifies a particular process scheduler (specified in SERVERNAMERQST) that cannot run the updated process category, then the requested server name is blanked out.

- The triggers will only fire when requests for nVision-Report/NVSRUN or nVision-ReportBook/RPTBOOK are submitted.

- There is an almost identical trigger on each table (apart from the conditionally compiled debug code). I will only exhibit the trigger on PSPRCSRQST in this post.

- Normally, one would not code WHEN OTHERS THEN NULL exceptions handlers in PL/SQL. However, I do this on triggers on process scheduler tables to prevent any error being raised causing the scheduler to crash.

- Conditionally compiled debug code in the trigger is not compiled if the mydebug flag is set to FALSE.

ALTER SESSION SET PLSQL_CCFLAGS = 'mydebug:FALSE';

CREATE OR REPLACE TRIGGER sysadm.gfc_nvision_excel_redirect_rqst

BEFORE INSERT ON s.psprcsrqst

FOR EACH ROW

WHEN (new.prcstype IN('nVision-Report','nVision-ReportBook')

AND new.prcsname IN('RPTBOOK','NVSRUN')

)

DECLARE

l_excel INTEGER := 0;

BEGIN

$IF $$mydebug $THEN dbms_output.put_line('Entering Trigger psoft.gfc_nvision_excel_redirect_rqst'); $END

IF :new.prcstype = 'nVision-ReportBook' THEN

--check for reportbook running report that uses layout on Excel list

SELECT 1

INTO l_excel